As a software engineer who’s been in the trenches for over a decade, I’ve handled countless file uploads to Amazon S3. Today, I’ll share everything you need to know about uploading files to S3 using Node.js, complete with practical examples and best practices.

📌 Table of Contents

- Basic S3 Upload

- Multipart Upload

- Presigned URLs

- Stream Upload

- Best Practices

- FAQ Section

🎯 Basic S3 Upload

Let’s start with the simplest approach using the AWS SDK for JavaScript. First, install the required package:

    npm install @aws-sdk/client-s3

Here’s a basic upload example:

    import { S3Client, PutObjectCommand } from "@aws-sdk/client-s3";
    import fs from "fs";

    // Read credentials from the environment instead of hardcoding them
    const s3Client = new S3Client({
      region: "us-east-1",
      credentials: {
        accessKeyId: process.env.AWS_ACCESS_KEY_ID,
        secretAccessKey: process.env.AWS_SECRET_ACCESS_KEY
      }
    });

    async function uploadFile(filePath, bucketName, key) {
      try {
        // Read the whole file into memory without blocking the event loop
        const fileContent = await fs.promises.readFile(filePath);
        const command = new PutObjectCommand({
          Bucket: bucketName,
          Key: key,
          Body: fileContent
        });
        const response = await s3Client.send(command);
        console.log("Upload successful!", response);
      } catch (err) {
        console.error("Error uploading file:", err);
      }
    }

🔄 Multipart Upload

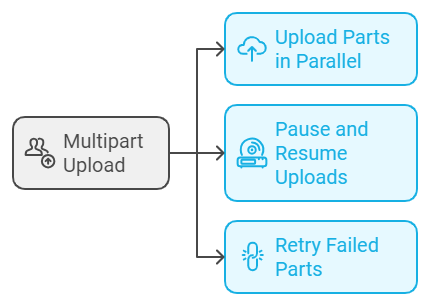

For larger files (>100MB), multipart upload is your best friend! It allows you to:

- Upload parts in parallel

- Pause and resume uploads

- Retry failed parts
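
To make the "parallel" and "retry" points a bit more concrete, here's a minimal sketch of the control flow only. It assumes you supply a hypothetical `uploadPart(partNumber)` callback (in real code that callback would wrap an `UploadPartCommand`); the helper name `uploadPartsInParallel` and its options are made up for illustration and are not part of the AWS SDK.

```javascript
// Hypothetical helper: calls uploadPart(partNumber) for parts 1..partCount,
// keeping at most `concurrency` uploads in flight and retrying each part
// up to `maxAttempts` times before giving up.
async function uploadPartsInParallel(
  partCount,
  uploadPart,
  { concurrency = 4, maxAttempts = 3 } = {}
) {
  const results = new Array(partCount);
  let next = 1; // next part number to hand out (S3 part numbers start at 1)

  async function uploadWithRetry(partNumber) {
    for (let attempt = 1; ; attempt++) {
      try {
        return await uploadPart(partNumber);
      } catch (err) {
        // Out of attempts: surface the error to the caller
        if (attempt >= maxAttempts) throw err;
      }
    }
  }

  // Each worker pulls the next unclaimed part until none are left
  async function worker() {
    while (next <= partCount) {
      const partNumber = next++;
      results[partNumber - 1] = await uploadWithRetry(partNumber);
    }
  }

  const workerCount = Math.min(concurrency, partCount);
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results; // results[i] belongs to part i + 1
}
```

The results come back in part order, which is convenient because the final "complete" call needs the parts listed by ascending part number. A production version would probably add a delay between retries and stop the remaining workers after a fatal error.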

    import {
      CreateMultipartUploadCommand,
      UploadPartCommand,
      CompleteMultipartUploadCommand
    } from "@aws-sdk/client-s3";

    // Reuses `fs` and `s3Client` from the basic example
    async function multipartUpload(filePath, bucketName, key) {
      const fileStream = fs.createReadStream(filePath);
      const chunkSize = 5 * 1024 * 1024; // 5MB chunks (the minimum S3 accepts for every part except the last)

      // Initialize multipart upload; the UploadId identifies it in every later call
      const { UploadId } = await s3Client.send(
        new CreateMultipartUploadCommand({
          Bucket: bucketName,
          Key: key
        })
      );

      // Upload parts
      const uploadPromises = [];
      let partNumber = 1;

      // Read and upload chunks
      // ... (implementation details)
    }

🔐 Presigned URLs

Presigned URLs are perfect for secure client-side uploads. They allow users to upload directly to S3 without exposing your AWS credentials.

    // Requires: npm install @aws-sdk/s3-request-presigner
    // Reuses `s3Client` and `PutObjectCommand` from the basic example
    import { getSignedUrl } from "@aws-sdk/s3-request-presigner";

    async function generatePresignedUrl(bucketName, key) {
      const command = new PutObjectCommand({
        Bucket: bucketName,
        Key: key
      });
      const signedUrl = await getSignedUrl(s3Client, command, {
        expiresIn: 3600 // URL expires in 1 hour
      });
      return signedUrl;
    }

📤 Stream Upload

Streaming is efficient for handling large files without loading them entirely into memory:

    // Requires: npm install @aws-sdk/lib-storage
    // Reuses `fs` and `s3Client` from the basic example
    import { Upload } from "@aws-sdk/lib-storage";

    async function streamUpload(filePath, bucketName, key) {
      const fileStream = fs.createReadStream(filePath);
      const upload = new Upload({
        client: s3Client,
        params: {
          Bucket: bucketName,
          Key: key,
          Body: fileStream
        }
      });

      try {
        const result = await upload.done();
        console.log("Upload completed:", result);
      } catch (err) {
        console.error("Upload failed:", err);
      }
    }

💡 Best Practices

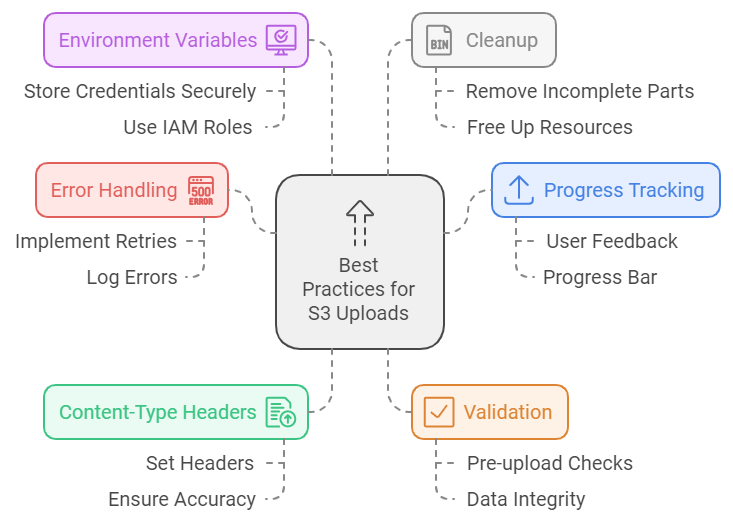

- Always use error handling and retries

- Implement progress tracking for better user experience

- Use content-type headers appropriately

- Consider implementing validation before upload

- Use environment variables for AWS credentials

- Implement proper cleanup for failed multipart uploads
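
Two of the points above (validating before upload and setting the content type) can be handled in one small pre-flight check. This is only a sketch under my own assumptions: the `ALLOWED_TYPES` allow-list, the 50 MiB `MAX_BYTES` cap, and the `validateUpload` name are all hypothetical choices for illustration, not S3 requirements.

```javascript
// Hypothetical allow-list mapping file extensions to Content-Type values
const ALLOWED_TYPES = {
  ".png": "image/png",
  ".jpg": "image/jpeg",
  ".jpeg": "image/jpeg",
  ".pdf": "application/pdf",
  ".txt": "text/plain"
};

// Assumed application-level limit (50 MiB); this is not an S3 limit
const MAX_BYTES = 50 * 1024 * 1024;

// Returns { ok: true, contentType } or { ok: false, reason }
function validateUpload(fileName, sizeInBytes) {
  const dot = fileName.lastIndexOf(".");
  const extension = dot === -1 ? "" : fileName.slice(dot).toLowerCase();
  const contentType = ALLOWED_TYPES[extension];

  if (!contentType) {
    return { ok: false, reason: `File type "${extension}" is not allowed` };
  }
  if (!Number.isInteger(sizeInBytes) || sizeInBytes <= 0) {
    return { ok: false, reason: "File is empty or its size is invalid" };
  }
  if (sizeInBytes > MAX_BYTES) {
    return { ok: false, reason: `File exceeds the ${MAX_BYTES} byte limit` };
  }
  return { ok: true, contentType };
}
```

When the check passes, the returned `contentType` can go into the `ContentType` field of the `PutObjectCommand` parameters so the object is served with the right header later.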

❓ FAQ Section

What’s the maximum file size for S3 uploads?

The maximum file size for a single PUT operation is 5GB. For larger files, you must use multipart upload, which supports up to 5TB.
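
Here's a rough sketch of how those limits might translate into a decision in code. It uses the 5GB single-PUT limit quoted above, the 100MB threshold from the multipart section, and two S3 multipart constraints (a 5 MiB minimum part size and at most 10,000 parts per upload). The `planUpload` function and its return shape are hypothetical, and it's worth checking the current AWS documentation for the exact limits.

```javascript
const MiB = 1024 * 1024;
const GiB = 1024 * MiB;

const SINGLE_PUT_LIMIT = 5 * GiB; // single PUT limit quoted above
const MIN_PART_SIZE = 5 * MiB;    // minimum size for every part except the last
const MAX_PARTS = 10000;          // maximum number of parts in one multipart upload

// Hypothetical planner: picks a strategy and, for multipart, a part size
// large enough to keep the part count within MAX_PARTS.
function planUpload(fileSize, multipartThreshold = 100 * MiB) {
  const threshold = Math.min(multipartThreshold, SINGLE_PUT_LIMIT);
  if (fileSize <= threshold) {
    return { strategy: "single-put", partSize: fileSize, partCount: 1 };
  }
  const partSize = Math.max(MIN_PART_SIZE, Math.ceil(fileSize / MAX_PARTS));
  const partCount = Math.ceil(fileSize / partSize);
  return { strategy: "multipart", partSize, partCount };
}
```

For example, `planUpload(200 * MiB)` yields 40 parts of 5 MiB each, while a 100 GiB file gets a larger part size so that it still fits within 10,000 parts.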

How can I secure my S3 uploads?

Use bucket policies, IAM roles, and consider implementing server-side encryption. Check out the AWS S3 security documentation.

Should I use presigned URLs or direct upload?

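Use presigned URLs when the browser or mobile client uploads the file, and upload directly with the SDK when the file is already on your server. Presigned URLs are more secure for client-side implementations because the client never sees your AWS credentials, only a time-limited URL for one specific object.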
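
For the client side of that flow, here's a minimal sketch of what the upload itself might look like once your server has handed the client a presigned URL. The `uploadWithPresignedUrl` name and the injectable `fetchImpl` parameter are my own assumptions (the latter just makes the function easy to test); the only real requirement is that a URL signed for `PutObjectCommand` is called with an HTTP `PUT`.

```javascript
// Hypothetical client-side helper: sends `body` to a presigned URL with PUT.
// `fetchImpl` defaults to the global fetch (browsers and Node 18+).
async function uploadWithPresignedUrl(presignedUrl, body, fetchImpl = fetch) {
  const response = await fetchImpl(presignedUrl, {
    method: "PUT", // must match the command the URL was signed for
    body
  });

  if (!response.ok) {
    // An expired or tampered URL typically shows up here as a 403
    throw new Error(`Upload failed with status ${response.status}`);
  }
  return response.status;
}
```

In a browser you'd typically pass a `File` or `Blob` as `body`, and the bucket needs a CORS rule that allows `PUT` from your site's origin.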

How can I handle upload progress?

You can track progress using the httpUploadProgress event of the Upload class:

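    // Register the listener before calling upload.done()
    upload.on("httpUploadProgress", (progress) => {
      // total can be undefined when the body is a stream of unknown length
      if (!progress.total) return;
      const percentage = (progress.loaded / progress.total) * 100;
      console.log(`Upload progress: ${percentage.toFixed(2)}%`);
    });

🔗 Useful Resources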

Remember to always consider your specific use case when choosing an upload approach. Whether you’re building a small application or a large-scale system, S3 provides the flexibility and reliability you need for file storage.

Happy coding! 🚀 Feel free to reach out if you have any questions about implementing these solutions in your projects.
